“In-person is the gold standard,” I heard a colleague say. We were discussing the impact of the pandemic on team communications.

The impact of the pandemic, of course, is that there’s almost no in-person to be had. Our software development world is now almost entirely remote.

Our world was a long way from the gold standard even before the pandemic. I was far from the first to the party when, at Apple 30 years ago, I outsourced development to a programming team in Ohio. Despite the best intentions of the Agile Manifesto’s signers, who stated that “the most efficient and effective method of conveying information to and within a development team is face-to-face conversation,” the trend continued: organizations scattered teams around the world, and some teams, including some I managed, were entirely remote.

But let me step back…

I was first introduced to agile in the form of XP 21 years ago, in 1999, when I was at Schwab, and I soon began managing, leading and coaching agile teams. My first consulting engagement introducing agile came 10 years later. It was 2009, and I was advising a startup trying to find its path. While it had been following a north star that had mostly stayed the same, the path had veered every which way: a real challenge for a product team trying to execute what had been a six-month waterfall plan that was now in its 15th month.

Even a modicum of agile practices, I thought, would help this startup and its team. The team already had the building blocks: feature names in a spreadsheet. If we just ordered them effectively and developed in short iterations, the team ought to deliver a cadence of product increments with much earlier customer outcomes.

Before ordering features, I facilitated a discussion of what “done” should mean: a definition team members could apply to every feature. Then we transcribed their features out of Excel onto 3x5 cards so we could relatively size them, snaking the story cards back and forth on a conference room table until we had them in size order, then making a second pass to add “points” numbers. The sizing was crucial to backlog ordering, in which we stack-ranked the features so they were ordered not just by value but, taking size into account, by ROI. The ordered backlog then supplied fodder for the team to plan sprints, each of which would deliver a product increment: the highest-value features we could complete in two weeks. With customer-focused plans each targeting just two weeks, the team executed.

A colocated team plus practices and mindset. Relatively easy transition to relatively agile. Dramatically better than what they’d done before.

Lots of teams weren’t colocated, of course, maybe even most. But most of the ones reaching out to me in the early days were colocated, either in one location or in several distributed locations.

Scrum for Distributed Teams

When product development was in several locations, I sometimes found myself on the road; at other times I was teaching remotely. I was soon delivering the presentation parts of training using some of Zoom’s predecessors: Skype, WebEx, Google Hangouts and Adobe Connect. Jira and a ton of other tools provided a facsimile of cards on a wall. Definitions of Done could be collaboratively composed in Google Docs or Confluence. Wikis worked reasonably well for capturing retrospective observations and learnings.

But a key part of agile backlog grooming relies on ordering by ROI, or “bang for the buck”, which in turn relies on relative sizing of stories to supply the “buck” — the “I” in “ROI” — the relative investment required. I wasn’t much impressed with Planning Poker - I’d much earlier learned a technique much more powerful — the

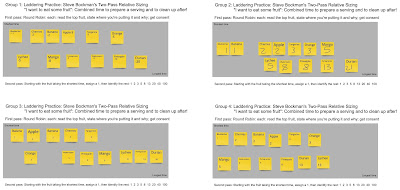

Team Two-Pass Relative Sizing method that Steve Bockman devised - snaking.

Snaking is a two-step process: first the entire team sorts the stories by relative time and complexity on a conference table, resulting in a snake of 80 or 100 stories in ascending order by how long they’ll take relative to each other.

Agile two-pass sizing by a colocated team: typically it takes a team 3-4 hours to snake 80-150 stories (in this case 120), from smallest story to largest epic, and add points.

Then, after labeling the smallest story card a ‘1’, the team continues to label cards 1 until it reaches a card that is clearly no longer a 1 but twice that, which it labels a ‘2’; and so on up the scale.
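To make the two passes concrete, here is a minimal Python sketch. It assumes each story carries a hypothetical numeric `effort` standing in for the team’s judgment (on a real table nobody assigns numbers during the first pass) and uses a common modified-Fibonacci scale (1, 2, 3, 5, 8, 13, 20, 40, 100) as an assumption; teams’ scales vary. The names `Story`, `first_pass` and `second_pass` are illustrative only.

```python
from dataclasses import dataclass

# Assumed modified-Fibonacci point scale; teams use variations of it.
SCALE = [1, 2, 3, 5, 8, 13, 20, 40, 100]


@dataclass
class Story:
    title: str
    effort: float  # hypothetical number standing in for the team's sense of relative effort
    points: int = 0


def first_pass(stories):
    """Pass 1: lay the cards out in one snake, smallest to largest."""
    return sorted(stories, key=lambda s: s.effort)


def second_pass(snake):
    """Pass 2: label the smallest card 1, keep giving cards the same label,
    and step up the scale only once a card clearly reaches the next value
    relative to the smallest card."""
    smallest = snake[0].effort  # assumed to be greater than zero
    level = 0
    for story in snake:
        ratio = story.effort / smallest
        while level + 1 < len(SCALE) and ratio >= SCALE[level + 1]:
            level += 1
        story.points = SCALE[level]
    return snake


if __name__ == "__main__":
    backlog = [
        Story("Export report", 6.0),
        Story("Fix typo", 1.0),
        Story("Add login", 2.5),
        Story("Rename field", 1.2),
        Story("Payment flow", 9.0),
    ]
    for s in second_pass(first_pass(backlog)):
        print(f"{s.points:>3}  {s.title}")
```

Run as a script, the sample snake comes out labeled 1, 1, 2, 5 and 8. Because the snake is already sorted, labels can only stay the same or climb, which is what lets the second pass go so quickly.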

Less than half a day. Simple, fast, collaborative, and powerful when the team is in person. Even most distributed teams were in person - they were essentially groupings of in-person teams distributed from each other. But some weren’t. Some were entirely remote.

As simple as card-sorting and card-labeling seem, I’d found no tool to support them for entirely remote teams, and I had been looking for years. Could card wall tools suffice? Nope, they’d never considered my use case. (No, not even Trello. Not even close.) Google Draw? Not really. Spreadsheets? Not on your life. List tools? Hard, very hard, to swap card order. PowerPoint, maybe? Put each story on a slide and switch to Slide Sorter view? But PowerPoint begins with cards in a grid, very different from starting from a stack of 3x5s and putting one at a time onto the sorting “table” in the relative position it belongs.

And then, an entirely remote team

I’d stopped looking for a workable tool when, in 2015, a team in rural Maine asked if I could fly out to help their product team be more predictable. One problem: while headquarters was in rural Maine, the programmers were not. At least most of them weren’t. It turns out there aren’t a lot of .NET developers in rural Maine. The programmers were scattered across the country.

I described relative sizing to my new client: creating a snake of cards on a conference room table. And they described a tool called RealtimeBoard (now renamed Miro) that they were using for retrospectives, virtual stickies on a virtual whiteboard, that they thought might do the trick.

I was stoked.

Miro was the first tool I’d encountered that really let entirely remote teams accomplish relative sizing.

It was pretty easy to get started with Miro. Much as we’d transcribed feature names out of Excel onto a stack of 3x5 cards, now we were scribing them onto a stack of virtual cards in Miro. (A few months later, Miro and Jira had API integration, at which point Miro auto-generated a bevy of cards, each an instance of a ticket in Jira.)

Relative Sizing

To teach teams the sizing technique, I start with a warm-up exercise, asking students to size fruits. We start with 12 fruits. Agile stories typically have a “why,” and the why for all 12 fruits is the same (“I want to eat some fruit”); only the fruits differ.

Fruit-sizing exercise, when teams are colocated

The exercise is for the team to put the 12 fruit cards in order: not based on how long to eat the fruit, but based on the effort required - the combined “cost” of preparing a serving of the fruit and cleaning up after eating it (much as, in software, we need to combine development and testing efforts). Twelve cards are a small enough number to get a quick first experience with snaking. I give teams five minutes to put them in order by effort, three more minutes to number them with the usual modified set of Fibonacci numbers.

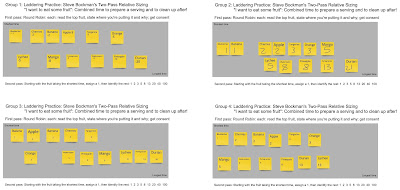

It turned out that Miro was a pretty good tool for remote teams to snake the relative cost of 12 fruit.

Fruit-sizing exercise: four teams worked simultaneously, each on its own “sorting table”

The more complicated next exercise is a scrum-ified version of the XP Game, in which teams size and then order a backlog of puzzle and game activity "stories" (for example, sorting cards or calculating a bunch of sums), then plan and execute short sprints, their goal being to deliver the most customer value. Here, Miro was able to emulate not only conference-table sizing but also a card wall running from backlog to sprint plan to user acceptance test to done, as well as the activities themselves.

The Scrum Game: three teams working simultaneously, each with its own stories, sorting table and card wall

Finally, I facilitate workshops during which teams size stories from their own software projects. It’s common for a team to have fifty or eighty or a hundred or more stories in its project backlog. Provided we limit the number to a maximum of 150, we size them all.

The most recent Study of Product Team Performance, a survey of teams all over the world, revealed that higher-performing teams tend to work from backlogs more than three months long. And those teams have sized not just the stories selected for the next iteration but all of their backlog’s features, epics and stories.

Comparing Miro with a real conference table

During snaking, when the team gets beyond a dozen stories and wants to insert a story somewhere in the middle, the difference between cards on a table and an online Scrum board becomes apparent. Making physical space on a table is something we learned to do as children, whereas to move a bunch of cards at a time in an online tool, we have to learn its interface.

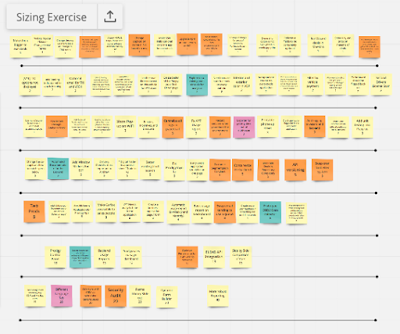

On a real-life table, we usually snake the cards back and forth. But I discovered that, on a virtual table, rather than snaking cards back and forth, it is easier to organize the cards in rows, one above the next, snaking from the end of each row of cards back to the beginning of the next row, each row arranged from smallest to largest, left to right.

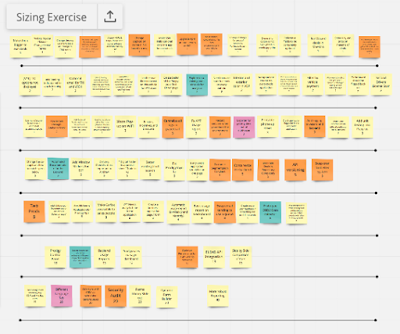

Snaking a virtual team’s stories is more easily done in rows
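Rows change what an insertion costs: dropping a card into the middle pushes every later card one slot along, and a card at the end of a row wraps to the start of the next. The Python below is a minimal sketch of that insert-and-reflow behavior, the kind of thing a tool could automate. `insert_card` and `row_layout` are hypothetical helpers, not part of any Miro or Mural API, and cards are plain strings for simplicity.

```python
# Hypothetical helpers; not a Miro or Mural API.

def insert_card(snake, card, index):
    """Return a new ordering with `card` placed at `index`;
    every later card moves one slot along."""
    return snake[:index] + [card] + snake[index:]


def row_layout(snake, per_row):
    """Assign each card a (row, column) slot. Rows run smallest to largest,
    left to right, and the order wraps from the end of one row to the start
    of the next."""
    return [(card, *divmod(i, per_row)) for i, card in enumerate(snake)]


if __name__ == "__main__":
    fruits = ["grape", "apple", "banana", "plum", "kiwi", "mango"]
    before = row_layout(fruits, per_row=3)
    after = row_layout(insert_card(fruits, "pear", 1), per_row=3)
    print(before)
    print(after)  # "banana" wraps from the end of row 0 to the start of row 1
```

Keeping the order in one list and deriving positions from it means an insertion never has to shuffle cards by hand; the grid is just a view of the snake.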

In my experience, where snaking is easier on a table, rows are easier in virtual space. If the team developing Miro ever delivers a feature to automatically insert a card into a matrix of rows of cards, it may make virtual sizing easier than real-space sizing! But for the moment, in-person proximity, the gold standard, still wins. Still, Miro is pretty good, and it integrates with Jira. And I’m delighted to report that Miro is no longer alone in providing this functionality. A year ago I was engaged by a client already using a remarkably similar tool, Mural.

Delivering a Scrum experience

One of the things my clients love about my scrum trainings is that they're immersive. I run classes as agile projects. I put up a card wall with a backlog of relatively sized "learning stories" that have been ordered to always be delivering the next-highest-value learning. Much as we do with software project stories, my learning stories have relative story points. At the end of one-hour “sprints,” I update a Burn-Up Chart, yielding emergent velocity that predicts how many of the learning stories in our backlog we will likely complete by the end of class. This makes the class experiential — I’m not just teaching about scrum, but immersing my classes in it.
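The burn-up arithmetic behind that prediction is simple enough to sketch. The Python below is illustrative, not the tool I use in class: it assumes velocity is the average number of points completed per one-hour sprint so far, and that the remaining learning stories are taken strictly in backlog order. The function names and sample numbers are hypothetical.

```python
from itertools import accumulate


def burn_up(points_per_sprint):
    """Cumulative points completed after each sprint: the rising line on a burn-up chart."""
    return list(accumulate(points_per_sprint))


def forecast(points_per_sprint, remaining_backlog, sprints_left):
    """Estimate how many remaining stories (in backlog order) fit in the sprints left,
    assuming future sprints match the average velocity so far."""
    velocity = sum(points_per_sprint) / len(points_per_sprint)
    capacity = velocity * sprints_left
    used = 0
    stories = 0
    for points in remaining_backlog:  # next-highest-value story first
        if used + points > capacity:
            break  # stop at the first story that will not fit; no skipping ahead
        used += points
        stories += 1
    return velocity, stories


if __name__ == "__main__":
    done = [5, 8, 8]  # hypothetical points completed in three one-hour sprints
    print(burn_up(done))
    print(forecast(done, [3, 5, 5, 8, 3, 2], sprints_left=3))
```

With sprints of 5, 8 and 8 points, velocity is 7, so three more sprints give about 21 points of capacity: enough for the next four stories in a remaining backlog of 3, 5, 5, 8, 3 and 2 points. The forecast updates every hour as new sprints land, which is what makes the velocity emergent.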

Setting all that up for real-world teaching is time-consuming. A few days beforehand, learning stories get handwritten onto scores of giant stickies for each class. Day of class, I arrive 45 minutes beforehand to transform a classroom wall into a backlog of learning stories poised to, one by one, march across the card wall, from “Backlog” through “In Progress” to “Done”. I prep a second wall with flipchart pages: one the burn-up chart, others blank to record students’ hopes and wants from the class, and later what they’ve learned and will take away.

While learning Miro and getting my first remote workshops set up were as tedious and slow as getting ready for my first classroom trainings years before, the result was a remarkable facsimile. Even better, it turned out downright handy for subsequent classes: once I’d set up my online Scrum board for the first class, I found I could save it and reload the setup as the basis for subsequent classes.

Training scrum board of learning modules, each notated with a relative story-point size, as class begins

Scribing students’ hopes for their learning and, hourly, updating a burn-up chart were similarly straightforward.

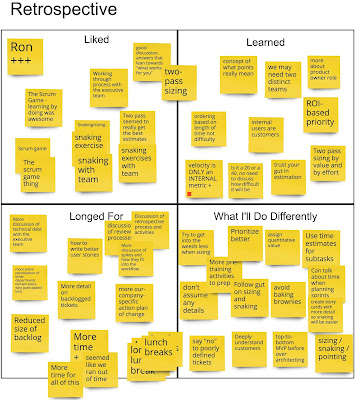

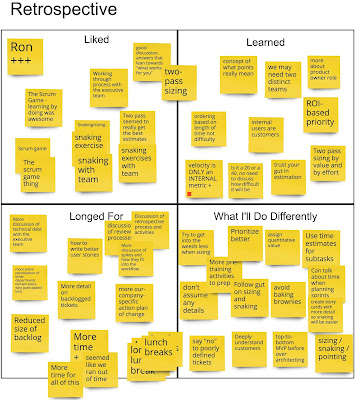

Retrospectives, too

That first client five years ago that introduced me to Miro had been using it for Retrospectives. Bobbie Manson from Mingle Analytics had been leveraging Miro to run one of the best agile Retrospectives I had yet seen. (I emulate her approach when, at the end of my virtual classes, we retrospect on the training, both to help students cement their learnings and to get feedback on what I can improve.)

Retrospective by students, after three days of agile training and workshops

Still, it’s snaking, the agile relative-sizing practice in which we use cards on a virtual whiteboard in place of cards on a table, that I think makes Miro and Mural so valuable, because relative sizing is what had been the stumbling block for distributed teams. Relative sizing, because it forms the backbone on which velocity and predictability are based, is one of the practices I see teams continue to heavily leverage long after class completes. And the tools’ usefulness is significantly enhanced by their integration with Jira: epics and stories are easily exported from Jira into Miro or Mural, and when the team determines relative points and writes them on cards on the Miro board, the points are automatically updated through the API to the tickets in Jira.

As Steve Bockman, relative ordering’s creator, has noted, the ordering technique is equally useful for relative valuing:

- Product owners snake stories from most-value-to-customers to least.

- Product organizations snake project opportunities from most contribution to company objectives to least.

- Tech leads and architects snake tech debt and other technical product backlog items from most urgent and highest risk to least.

- Engineering leaders snake engineering, infrastructure and debt projects the same way.

Relative value divided by relative size yields ROI - return on investment - bang for the buck. It’s a useful guide to seeing what to do first and next and next after that.
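Here is a minimal Python sketch of that calculation, assuming both snakes have already been turned into numbers: relative value from a value snake and relative size in story points from a sizing snake. The `Item` type, the function names and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Item:
    title: str
    value: float  # relative value from a value snake (hypothetical scale)
    size: float   # relative size in story points from a sizing snake


def roi(item):
    """Relative value divided by relative size: bang for the buck."""
    return item.value / item.size


def order_by_roi(items):
    """Highest ROI first; sorted() is stable, so ties keep their input order."""
    return sorted(items, key=roi, reverse=True)


if __name__ == "__main__":
    backlog = [
        Item("Checkout redesign", 13, 8),
        Item("Saved carts", 8, 2),
        Item("Fix search typo", 5, 1),
    ]
    for item in order_by_roi(backlog):
        print(f"{roi(item):5.2f}  {item.title}")
```

For items valued 13, 8 and 5 with sizes 8, 2 and 1, the order is the 1-point item first (ROI 5), then the 2-point item (ROI 4), then the 8-point item (about 1.6): the most valuable item lands last because it is also the most expensive.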

Prior to the pandemic, I had trained teams spread across as many as a dozen different geographies as well as remote teams on other continents. Then, a combination of Skype and Miro had not only let me train teams in remote locations and scattered across geographies, but also enabled those distributed teams to continue to use agile’s powerful, collaborative techniques and practices after I was gone.

Having five years of experience with a remote collaborative tool gave me a major head start in serving teams that suddenly went remote with the onset of the pandemic. A combination of high-quality conferencing like Zoom and virtual whiteboarding like Miro and Mural provides a serviceable stand-in for physical cards, card walls, sizing, ordering, charts and all the rest, for every team.

Interim VP Engineering,
